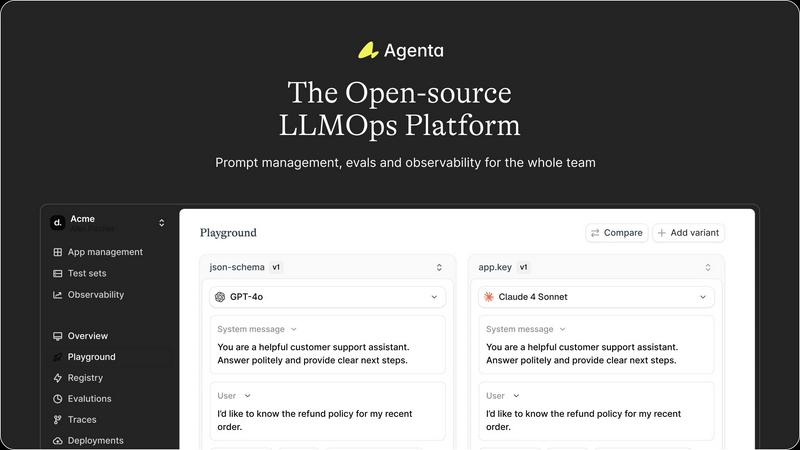

Agenta

Discover how Agenta's open-source platform helps teams build and manage reliable LLM applications together.


About Agenta

Agenta is an open-source LLMOps platform that aims to turn prompt engineering from ad hoc trial and error into a reliable, collaborative process. Its premise is that while LLMs are inherently stochastic, the processes teams use to manage them shouldn't be. It replaces prompts scattered across Slack, siloed work between developers and domain experts, and risky "vibe testing" with a centralized, structured workflow. The platform is built for cross-functional teams (engineers, product managers, and subject matter experts) who need to experiment swiftly, evaluate rigorously, and debug confidently. Its main value proposition is acting as the single source of truth for the entire LLM application lifecycle, integrating prompt management, automated evaluation, and production observability into one environment. By providing infrastructure for LLMOps best practices, Agenta helps teams ship robust AI applications faster and replace guesswork with evidence-based development.

Features of Agenta

Unified Experimentation Playground

Work in a collaborative workspace where you can compare different prompts, parameters, and foundation models side by side in real time. This removes the need to juggle multiple tabs or scripts and lets your entire team iterate rapidly. It maintains a complete version history of every change, so you can track what worked, what didn't, and revert if needed. It is also model-agnostic, which avoids vendor lock-in and lets you test the best model for the job from any provider.

Automated and Integrated Evaluation Framework

Replace subjective hunches with concrete data using Agenta's powerful evaluation system. It allows you to create a systematic, repeatable process for validating every change before it reaches users. You can integrate a wide array of evaluators, including LLM-as-a-judge setups, built-in metrics, or your own custom code. This enables you to evaluate not just final outputs but the entire reasoning trace of complex agents, providing deep insights into where performance succeeds or fails.

Production Observability & Debugging

Gain visibility into your live AI applications. Agenta traces every request, helping you pinpoint the exact failure points in complex chains or agentic workflows. You can annotate these traces with your team or gather direct feedback from end users. Any problematic production trace can be turned into a test case with a single click, creating a tight feedback loop that continuously improves your system's reliability.
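To make the trace-to-test-case idea concrete, here is a minimal sketch in plain Python. It does not use Agenta's SDK, and the trace schema (`trace_id`, `inputs`, `output`, `annotation`) and the `trace_to_test_case` helper are hypothetical names chosen for illustration. In Agenta itself this step is described as a single click in the UI.

```python
import json


def trace_to_test_case(trace: dict) -> dict:
    """Build a regression test case from a (hypothetical) trace record."""
    annotation = trace.get("annotation", {})
    return {
        "name": f"regression-{trace['trace_id']}",
        "inputs": trace["inputs"],
        # Keep the failing output so reviewers can see what went wrong.
        "bad_output": trace["output"],
        # The corrected answer supplied by a teammate, if any.
        "expected": annotation.get("expected_output"),
        "notes": annotation.get("comment", ""),
    }


# Hypothetical production trace that a reviewer flagged and annotated.
trace = {
    "trace_id": "t-123",
    "inputs": {"question": "What is your refund window?"},
    "output": "Refunds are available for 90 days.",
    "annotation": {
        "expected_output": "Refunds are available for 30 days.",
        "comment": "Wrong refund window quoted.",
    },
}

test_case = trace_to_test_case(trace)
print(json.dumps(test_case, indent=2))
```

Storing the failing output next to the expected one keeps the test case self-explanatory when it is reviewed later.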

Cross-Functional Collaboration Hub

Break down the walls between technical and non-technical team members. Agenta provides a safe, intuitive UI for domain experts and product managers to directly edit prompts, run experiments, and review evaluation results without writing code. This democratizes the development process, ensuring the people with the deepest subject matter expertise can directly influence and improve the AI's performance, all while maintaining governance and version control.

Use Cases of Agenta

Developing and Refining Customer Support Agents

Teams building AI-powered support chatbots can use Agenta to systematically test different response tones, information retrieval strategies, and escalation protocols. Product managers and support leads can collaborate with engineers in the playground to craft ideal responses, then use automated evaluations to ensure the agent stays helpful, accurate, and on-brand before any deployment, drastically reducing the risk of public failures.

Building Reliable Content Generation Workflows

Marketing and content teams can leverage Agenta to manage prompts for generating blog posts, social media copy, or product descriptions. They can compare outputs from different models like GPT-4, Claude, or Llama, evaluate for brand voice adherence and SEO quality, and store winning prompt versions. When a generated piece misses the mark, they can debug the trace to see if the issue was in the instruction, the context, or the model itself.

Creating Evaluated Data Extraction Pipelines

For teams transforming unstructured documents into structured data, Agenta supports the full loop from prompt iteration to validation. Developers can experiment with prompts to improve extraction accuracy from invoices, contracts, or forms. They can then create evaluation suites that check for field-level correctness and consistency, moving beyond simple LLM judgments to custom validators. Observability traces help debug complex extractions that span multiple document pages or sections.
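A field-level check of the kind described above might look like the following sketch. It is plain Python, independent of Agenta's SDK. The function names and the invoice fields are assumptions made for illustration.

```python
def normalize(value):
    """Compare values case-insensitively and ignore surrounding whitespace."""
    return None if value is None else str(value).strip().lower()


def field_accuracy(extracted: dict, expected: dict) -> dict:
    """Score an extraction field by field against the expected values."""
    per_field = {
        name: normalize(extracted.get(name)) == normalize(want)
        for name, want in expected.items()
    }
    # With no expected fields there is nothing to get wrong.
    accuracy = sum(per_field.values()) / len(per_field) if per_field else 1.0
    return {"per_field": per_field, "accuracy": accuracy}


# Hypothetical invoice: one field matches, one is wrong, one is missing.
expected = {"invoice_number": "INV-001", "total": "120.50", "currency": "EUR"}
extracted = {"invoice_number": " inv-001 ", "total": "125.50"}

result = field_accuracy(extracted, expected)
print(result["per_field"])
print(round(result["accuracy"], 2))
```

Reporting per-field results alongside the aggregate score shows which fields a prompt change improved or broke.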

Managing Multi-Step Analytical and Research Agents

When building advanced agents that perform research, analysis, and summarization, Agenta's full-trace evaluation and observability become critical. Teams can observe the agent's step-by-step reasoning, identify where it gets derailed or makes poor web search choices, and save those failure traces as tests. This allows for iterative refinement of the agent's planning and tool-use logic in a controlled, evidence-based manner.

Frequently Asked Questions

Is Agenta really open-source?

Yes, Agenta is a genuinely open-source platform. The core codebase is publicly available on GitHub, where you can review the code, contribute to its development, and self-host the entire platform. This open approach ensures transparency, avoids vendor lock-in, and allows the tool to evolve with direct input from the community of AI builders who use it daily.

How does Agenta integrate with existing AI frameworks?

Agenta is designed for seamless integration into your current stack. It works natively with popular frameworks like LangChain and LlamaIndex, and is compatible with any model provider (OpenAI, Anthropic, Cohere, open-source models, etc.). You can typically integrate Agenta's SDK with a few lines of code, allowing you to add observability, experimentation, and evaluation capabilities without rewriting your application.

Can non-technical team members really use Agenta safely?

Yes. A key design goal of Agenta is to bridge the gap between technical and non-technical roles. The platform provides a web interface where domain experts and product managers can access the prompt playground, modify text-based prompts, compare outputs, and view evaluation results, all without touching code or the command line. Permissions and version history ensure changes are controlled and reversible.

What kind of evaluations can I run on the platform?

Agenta supports a highly flexible evaluation ecosystem. You can use LLM-as-a-judge setups where a more powerful model scores outputs, utilize built-in evaluators for metrics like correctness or similarity, or write and integrate your own custom evaluation functions in Python for domain-specific checks. This allows you to evaluate everything from simple Q&A accuracy to the nuanced quality of a creative writing assistant.
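As an illustration of a custom evaluation function, here is a minimal similarity evaluator. It is a sketch in standard-library Python, not Agenta's evaluator interface. The function names, the returned dictionary shape, and the 0.8 threshold are assumptions.

```python
from difflib import SequenceMatcher


def similarity_score(output: str, reference: str) -> float:
    """Return a 0..1 similarity ratio between an output and a reference."""
    a = output.strip().lower()
    b = reference.strip().lower()
    return SequenceMatcher(None, a, b).ratio()


def evaluate(output: str, reference: str, threshold: float = 0.8) -> dict:
    """Score one output and flag whether it clears the (assumed) threshold."""
    score = similarity_score(output, reference)
    return {"score": score, "passed": score >= threshold}


print(evaluate("Paris", "paris"))
print(evaluate("The capital is Lyon", "Paris"))
```

Character-level similarity is a crude proxy for answer quality. For domain-specific checks, the body of `evaluate` would be replaced with whatever rule matters for the application.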

Pricing of Agenta

Specific pricing plans, tiers, and costs are not listed here. Agenta's website includes a "Pricing" page and a "Book a demo" option. For current information on self-hosted versus cloud offerings, enterprise plans, or any free tiers, check the "Pricing" page on the official website or book a demo to speak with the sales team.
